In-Consumption Social Listening with Moment-to-Moment Unstructured Data

Major video and live streaming platforms in China have recently introduced a live commenting feature that allows viewers to post comments in real time while consuming video content. Building on the rich live comment data, this research proposes a novel approach to in-consumption social listening that extracts consumers' live consumption experience. We demonstrate the approach in the context of online movie watching with a novel measure, moment-to-moment synchronicity (MTMS), that captures consumers’ in-consumption engagement. MTMS refers to the synchronicity between temporal variations in the volume of live comments and those in movie content mined from the movies' unstructured video, audio, and text data (i.e., camera motion, shot length, sound loudness, pitch, and spoken lines). We show that MTMS strongly predicts viewers’ post-consumption appreciation of movies and that it can be evaluated at a finer level to identify engaging content.

Live Commenting and In-Consumption Social Listening

During the recent dramatic growth of the on-demand video segment in China, major online video platforms, such as Youku, Tencent Video, and iQiyi Video, have all introduced a live commenting function to engage consumers in nearly all video categories. Figure 1 illustrates the basic format of this function. The live commenting function enables viewers to post comments through an interactive box under the video player while streaming a video. These live comments are recorded in sync with the video content; that is, each comment is attached to a specific time in the video. When another viewer later watches the video, these comments appear in the upper part of the screen at the same moment in the video at which the commenters posted them. In this way, the viewer can see and reply to previous viewers’ comments. (To better illustrate this commenting function, we provide a YouTube video about the format of live comments and how to post them, which is available at https://youtu.be/W7Vw6gPWmQQ.) Video content such as movies and TV shows attracts mass viewer participation in generating live comments. For example, as of February 2017, the first episode of Ode to Joy II (2017), a popular Chinese TV show on Tencent Video, had received over 2.2 million live comments, 50 times the volume of its post-show comments.

Figure 1: An Example of Live Social Media Function on Online Video Platforms
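
The replay mechanism described above amounts to storing each comment with its playback offset and, during a later viewing, retrieving the comments whose offsets fall in the interval currently on screen. The following is a minimal sketch of that idea, not any platform's actual implementation; the `LiveComment` and `LiveCommentTrack` names and the half-open interval convention are hypothetical choices made for illustration.

```python
import bisect
from dataclasses import dataclass


@dataclass
class LiveComment:
    video_time: float  # playback offset (seconds) at which the comment was posted
    text: str


class LiveCommentTrack:
    """Hypothetical store that keeps one video's live comments ordered by playback time."""

    def __init__(self) -> None:
        self._times: list[float] = []  # sorted playback offsets
        self._comments: list[LiveComment] = []  # comments in the same order

    def post(self, video_time: float, text: str) -> None:
        # Attach the comment to the current playback position, keeping both lists sorted.
        idx = bisect.bisect_right(self._times, video_time)
        self._times.insert(idx, video_time)
        self._comments.insert(idx, LiveComment(video_time, text))

    def due(self, start: float, end: float) -> list[str]:
        # Comments to overlay while a later viewer plays the interval [start, end).
        lo = bisect.bisect_left(self._times, start)
        hi = bisect.bisect_left(self._times, end)
        return [c.text for c in self._comments[lo:hi]]


if __name__ == "__main__":
    track = LiveCommentTrack()
    track.post(65.0, "OMG")
    track.post(12.5, "The evil boss is bad")
    track.post(66.2, "here it comes")
    print(track.due(60.0, 70.0))  # ['OMG', 'here it comes']
```

Keeping the offsets in a sorted list makes each lookup a binary search, so a player can poll for newly due comments every few hundred milliseconds without scanning the whole track.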

These live social media activities (i.e., live comments) document viewers’ moment-to-moment (MTM) reactions to the video content and may reveal valuable information about their real-time consumption experience. In our research, we propose in-consumption social listening and develop a novel measure to listen to consumers' live experiences during video content consumption. The measure uses the unstructured information contained in the live comments (text) as well as in the corresponding video content (images and audio), and it can be evaluated at the MTM level to track consumer engagement over the course of a video. This allows online video platforms to improve managerial decisions on the placement of in-video advertisements and on content editing for sequentially released TV and reality shows. In the context of live streaming, the proposed measure can be constructed and analyzed during the broadcast to provide content providers with timely feedback on viewer engagement.

Our analysis of live comments also relates to marketers' growing interest in collecting and analyzing MTM data that document real-time consumer reactions. For example, marketing research companies such as Nielsen have adopted a variety of technologies, including functional magnetic resonance imaging (fMRI), electroencephalography (EEG), eye and face tracking, and biometrics. These MTM data have broad and important implications for the design of product content and ads (Baumgartner, Sujan, and Padgett 1997; Teixeira, Wedel, and Pieters 2010, 2012; Woltman Elpers, Wedel, and Pieters 2003), for understanding in-consumption drivers of product evaluations (Ramanathan and McGill 2007), and for forecasting demand for products such as TV shows and movies (Barnett and Cerf 2017; Liu, Singh, and Srinivasan 2016; Seiler, Yao, and Wang 2017). Importantly, compared with traditional methods, this research not only introduces a new form of field MTM data (live comments, which allow large-scale, low-cost analyses of consumption experience) but also offers a new perspective on analyzing MTM data that are highly unstructured in nature.

How We Listen to Live Consumer Experience

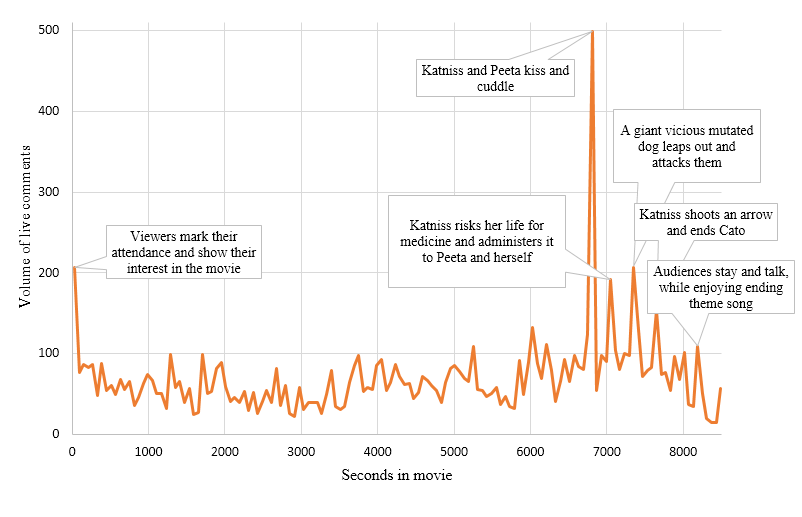

Existing social listening approaches typically build on analyzing the textual information in consumers’ social media posts. Unlike other social media posts, however, live comments may not directly reveal consumers' preferences for the content; instead, viewers write short, informal text made up of fragmented sentences, single words, or emoticons (e.g., “The evil boss is bad” or “OMG”). These features limit the information that natural language processing tools can extract. We therefore focus on a key feature of live comments (and of MTM data in general): they are real-time consumer reactions to product content. Consistent with this, the volume of live comments during a movie appears to track the evolution of the movie content (see Figure 2 for an example).

Figure 2: Distribution of Volume of Live Comments in The Hunger Games (2012)
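
A volume series like the one in Figure 2 can be built by counting comments in fixed-width bins of video time. The sketch below assumes the comment timestamps are already available as playback offsets in seconds and uses one-minute bins; the function name and the bin width are illustrative assumptions, not necessarily the choices made in the study.

```python
import numpy as np


def comment_volume(comment_times, runtime_seconds, bin_seconds=60.0):
    """Count live comments in consecutive fixed-width bins of video time.

    comment_times: playback offsets (seconds) of each live comment.
    Returns one count per bin; the last bin may extend past the runtime.
    """
    n_bins = int(np.ceil(runtime_seconds / bin_seconds))
    edges = np.arange(n_bins + 1) * bin_seconds
    # np.histogram treats bins as [a, b) except the last, which is [a, b].
    counts, _ = np.histogram(comment_times, bins=edges)
    return counts


if __name__ == "__main__":
    print(comment_volume([5, 30, 61, 119, 125], runtime_seconds=180).tolist())  # [2, 2, 1]
```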

We propose a novel measure called Moment-to-Moment Synchronicity (MTMS). MTMS refers to the synchronicity between temporal variations in the content of consumption and consumers' MTM reactions to those variations. In movies and TV shows, producers often build a series of temporal variations into the product content, such as motion, music, and story development, to engage the viewers. When consumers are engaged, their live posts are likely to follow these content variations (Dellarocas and Narayan 2006). In contrast, when they are bored, they may direct their attention to top-of-mind cues, and their live reactions are unlikely to follow the content variations (Berger and Schwartz 2011; Berger 2014). The synchronicity between live comments and movie content therefore reflects a product's ability to engage its viewers.

Movie and TV producers have long recognized the importance of engaging the audience. For example, when explaining how to control the active participation of the audience, Alfred Hitchcock described a scene in which two people are sitting at a table under which a bomb is ticking (Truffaut 1984). This creates a fascinating situation in which the viewers are aware of the bomb but the two characters are not. The audience members long to warn the characters on the screen: “You shouldn’t be talking about such trivial matters. There is a bomb beneath you and it is about to explode!” Achieving such delicate control of the viewers’ participation requires careful manipulation of a movie’s content, including the plot, shots, and sounds. Fundamentally, MTMS captures the MTM transmission of information from the content producer to the consumers. In this sense, the concept also applies to other forms of entertainment consumption and other types of MTM consumer reactions.

Video Analytics and Content Production

The key challenge in building MTMS is that data on the product content are highly unstructured. In our empirical examination, we analyze over 500 online movie videos. These videos consist of unstructured, high-frequency data streams (image sequences, audio, and text) and have a combined run time of over one month. In addition, movies are complex products in which the meaning of a scene depends on its context. Consequently, quantifying movie content from video data is challenging.
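
As a rough consistency check on these figures, assume exactly 500 movies and a 30-day month (both are lower bounds, since the text says "over"). The implied average run time per movie is then

$$
30\ \text{days} \times 24\ \tfrac{\text{h}}{\text{day}} \times 60\ \tfrac{\text{min}}{\text{h}} = 43{,}200\ \text{min},
\qquad
\frac{43{,}200\ \text{min}}{500\ \text{movies}} = 86.4\ \text{min per movie},
$$

which is in the range of a typical feature-length film.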

We tackle these difficulties by following “film grammar,” the grammatical principles generally followed by professional filmmakers (Adams, Dorai, and Venkatesh 2002; Arijon 1976). For instance, in film grammar a shot, the basic component of a movie, is a continuous camera action, and shot length is often manipulated to surprise the audience or to create emphasis. Therefore, the length of shots in different scenes of a movie can reveal which parts are intended to shock or surprise the viewers. Following similar principles and applying a set of scalable video processing tools, we capture MTM variations in movie content by quantifying camera motion, shot length, sound loudness, sound pitch, and the number of spoken lines.
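
To illustrate how two of these variables could be turned into per-second series, the sketch below computes (a) the length of the shot covering each second, given the cut times, and (b) loudness as the root-mean-square (RMS) level of the audio in decibels relative to full scale. It is not the authors' toolchain: it assumes cut times come from a separate shot-boundary detector and that audio samples are normalized to [-1, 1]. The function names and the one-second resolution are hypothetical, and camera motion, pitch, and spoken lines are not covered.

```python
import numpy as np


def shot_length_series(cut_times, runtime_seconds, step=1.0):
    """Length (seconds) of the shot that covers each time step of the video.

    A shot runs from one cut to the next; the first shot starts at 0 and the
    last ends at runtime_seconds.
    """
    cuts = np.sort(np.asarray(cut_times, dtype=float))
    bounds = np.concatenate(([0.0], cuts, [float(runtime_seconds)]))
    lengths = np.diff(bounds)
    t = np.arange(0.0, runtime_seconds, step)
    # Index of the shot whose [start, end) interval contains each time t.
    shot_idx = np.searchsorted(bounds, t, side="right") - 1
    return lengths[shot_idx]


def loudness_db(samples, sample_rate, window_seconds=1.0, eps=1e-12):
    """RMS loudness in dB relative to full scale, one value per window.

    Trailing samples that do not fill a whole window are dropped.
    """
    win = int(sample_rate * window_seconds)
    x = np.asarray(samples, dtype=float)
    n = len(x) // win
    frames = x[: n * win].reshape(n, win)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    # eps avoids log10(0) for silent windows.
    return 20.0 * np.log10(rms + eps)


if __name__ == "__main__":
    print(shot_length_series([2.0, 5.0], 6.0).tolist())  # [2.0, 2.0, 3.0, 3.0, 3.0, 1.0]
    print(np.round(loudness_db(np.full(8000, 0.5), sample_rate=4000), 2).tolist())  # [-6.02, -6.02]
```

Both functions return one value per second, so their outputs can be aligned with a comment-volume series binned at the same resolution.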

These content variables are the main dimensions that producers manipulate in a movie to engage the viewers. Focusing on them has two advantages. First, it is consistent with the supply-side variations that MTMS is meant to capture. Second, using film grammar lets us concentrate on key dimensions of the movie content, which dramatically simplifies the video processing: processing a video on a desktop PC takes about one fourth of its playing time, and video platforms with greater computing power can process videos even faster.
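
For a rough sense of scale, suppose the one-fourth ratio holds for every movie, the videos are processed one after another on a single desktop PC, and the combined run time of the sample is taken as one 30-day month (the text says "over one month," so this is a lower bound). The total processing time is then roughly

$$
\frac{1}{4} \times \left(30 \times 24 \times 60\ \text{min}\right)
= \frac{43{,}200\ \text{min}}{4}
= 10{,}800\ \text{min}
= 180\ \text{h}
= 7.5\ \text{days}.
$$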

Empirical Findings on MTMS

In our empirical analysis (inspired by Morck, Yeung, and Yu 2000), we operationalize a movie’s MTMS as the R-squared value from a regression of the volume of live comments on the movie content variables quantified from the videos. Our analysis reveals that MTMS significantly predicts movie evaluations. The more pronounced effect of MTMS among horror and thriller movies provides further support for its role in capturing viewers’ engagement with the content.
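
A minimal sketch of this operationalization, assuming the comment volume and the content variables have already been aggregated into the same time bins: fit an ordinary least squares regression with an intercept and report its R-squared. The function name `mtms`, the contemporaneous linear specification, and the absence of lags or controls are illustrative assumptions; the study's exact regression may differ. The demo uses synthetic data only.

```python
import numpy as np


def mtms(comment_volume, content_features):
    """R-squared from an OLS regression of live-comment volume on content variables.

    comment_volume:   shape (T,), number of live comments in each time bin.
    content_features: shape (T,) or (T, K), content variables for the same bins
                      (e.g., camera motion, shot length, loudness, pitch, spoken lines).
    """
    y = np.asarray(comment_volume, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(content_features, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    ss_res = np.sum((y - X @ beta) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    if ss_tot == 0:
        raise ValueError("comment volume is constant; R-squared is undefined")
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T = 120
    content = rng.normal(size=(T, 5))  # synthetic stand-ins for five content variables
    synced = 50 + content @ np.array([4.0, -2.0, 3.0, 1.0, 2.0]) + rng.normal(scale=2.0, size=T)
    unsynced = 50 + rng.normal(scale=5.0, size=T)
    # Comments that track the content yield a much higher R-squared than unrelated ones.
    print(round(mtms(synced, content), 2), round(mtms(unsynced, content), 2))
```

Using R-squared rather than a single coefficient means the measure summarizes how much of the comment dynamics is explained by all content dimensions jointly, regardless of the sign of each dimension's effect.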

In addition, we show that the MTMS effect is sizable and comparable to the star actor effect, that it is robust to different model specifications, and that MTMS captures a unique source of in-consumption information that existing measures built on the MTM volume of comments or on the textual information in comments cannot capture.

Finally, MTMS can also be evaluated for different types of consumer reactions and different periods of an experience. For instance, our text analysis of live comments reveals that the effect of MTMS is driven mainly by comments that explicitly mention the movie’s content (e.g., plot, characters, acting, and visual and audio effects). We also find that, relative to earlier periods, MTMS during a movie’s ending period contributes the most to its overall evaluation.
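
Evaluating MTMS by period can be sketched by splitting the binned series into consecutive segments and computing the same R-squared within each. The split into equal thirds and the function names are hypothetical; the same function could be applied to the volume of a subset of comments (for example, those classified as mentioning the movie's content) instead of all comments.

```python
import numpy as np


def r_squared(y, X):
    """R-squared of an OLS regression of y on X with an intercept."""
    X = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    ss_res = np.sum((y - X @ beta) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    if ss_tot == 0:
        raise ValueError("outcome is constant within this period")
    return 1.0 - ss_res / ss_tot


def mtms_by_period(comment_volume, content_features, n_periods=3):
    """MTMS computed separately for n_periods consecutive, near-equal segments."""
    y = np.asarray(comment_volume, dtype=float)
    X = np.asarray(content_features, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    segments = np.array_split(np.arange(len(y)), n_periods)
    return [r_squared(y[idx], X[idx]) for idx in segments]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T = 300
    content = rng.normal(size=(T, 2))  # synthetic content variables
    volume = rng.normal(size=T)  # comments unrelated to content...
    volume[200:] = 20 + content[200:] @ np.array([3.0, -2.0])  # ...except in the final third
    print([round(v, 2) for v in mtms_by_period(volume, content)])
```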

[Qiang Zhang, Hong Kong University of Science and Technology; Wenbo Wang, Hong Kong University of Science and Technology; Yuxin Chen, New York University (NYU), and NYU Shanghai.]

References

Adams, B., Dorai, C., & Venkatesh, S. (2002). Toward automatic extraction of expressive elements from motion pictures: Tempo. IEEE Transactions on Multimedia, 4(4), 472-481. https://ieeexplore.ieee.org/document/1176945

Arijon, D. (1976). Grammar of the film language. https://www.amazon.com/Grammar-Film-Language-Daniel-Arijon/dp/187950507X

Barnett, S. B., & Cerf, M. (2017). A ticket for your thoughts: Method for predicting content recall and sales using neural similarity of moviegoers. Journal of Consumer Research, 44(1), 160-181. https://academic.oup.com/jcr/article/44/1/160/2938969

Baumgartner, H., Sujan, M., & Padgett, D. (1997). Patterns of affective reactions to advertisements: The integration of moment-to-moment responses into overall judgments. Journal of Marketing Research, 219-232. https://www.jstor.org/stable/3151860?seq=1#metadata_info_tab_contents

Berger, J. (2014). Word of mouth and interpersonal communication: A review and directions for future research. Journal of Consumer Psychology, 24(4), 586-607. https://www.sciencedirect.com/science/article/pii/S1057740814000369

Berger, J., & Schwartz, E. M. (2011). What drives immediate and ongoing word of mouth? Journal of Marketing Research, 48(5), 869-880. http://journals.ama.org/doi/10.1509/jmkr.48.5.869

Dellarocas, C., & Narayan, R. (2006). A statistical measure of a population’s propensity to engage in post-purchase online word-of-mouth. Statistical Science, 21(2), 277-285. https://arxiv.org/pdf/math/0609228.pdf

Liu, X., Singh, P. V., & Srinivasan, K. (2016). A structured analysis of unstructured big data by leveraging cloud computing. Marketing Science, 35(3), 363-388. https://pubsonline.informs.org/doi/abs/10.1287/mksc.2015.0972

Morck, R., Yeung, B., & Yu, W. (2000). The information content of stock markets: Why do emerging markets have synchronous stock price movements? Journal of Financial Economics, 58(1), 215-260. https://www.nber.org/china/shangmorck.pdf

Ramanathan, S., & McGill, A. L. (2007). Consuming with others: Social influences on moment-to-moment and retrospective evaluations of an experience. Journal of Consumer Research, 34(4), 506-524. https://pdfs.semanticscholar.org/2de8/568cf73b77e7607bdf27d6c85663c905dbda.pdf

Seiler, S., Yao, S., & Wang, W. (2017). Does online word of mouth increase demand? (And how?) Evidence from a natural experiment. Marketing Science, 36(6), 838-861. https://pubsonline.informs.org/doi/10.1287/mksc.2017.1045

Teixeira, T. S., Wedel, M., & Pieters, R. (2010). Moment-to-moment optimal branding in TV commercials: Preventing avoidance by pulsing. Marketing Science, 29(5), 783-804. https://pubsonline.informs.org/doi/abs/10.1287/mksc.1100.0567

Teixeira, T., Wedel, M., & Pieters, R. (2012). Emotion-induced engagement in internet video advertisements. Journal of Marketing Research, 49(2), 144-159. http://journals.ama.org/doi/pdf/10.1509/jmr.10.0207

Truffaut, F. (1984). Hitchcock. New York: Simon & Schuster. https://www.simonandschuster.com/books/Hitchcock/Francois-Truffaut/9780671604295

Woltman Elpers, J. L., Wedel, M., & Pieters, R. G. (2003). Why do consumers stop viewing television commercials? Two experiments on the influence of moment-to-moment entertainment and information value. Journal of Marketing Research, 40(4), 437-453.

Zhang, Q., Wang, W., & Chen, Y. (2018). In-consumption social listening with moment-to-moment unstructured data: The case of movie appreciation and live comments. Working paper, Hong Kong University of Science and Technology. Available upon request.
